#Passing a JavaScript Value Between HTML Pages


Passing a JavaScript Value Between HTML Pages

In this article, we look at passing a JavaScript value between HTML pages. We will have two HTML pages, and we will move some information from one page to the other using some basic methods. This is helpful when you create websites where you need to pass data from one page to another. We will see this with a very basic example and…
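The excerpt is cut off before it names its method, so the following is only a minimal sketch of one common approach: carrying the value in the URL query string. The page name `page2.html`, the key `username`, and the helper names `buildTargetUrl` and `readValue` are assumptions made for illustration, not taken from the article.

```javascript
// Page 1: build a link to the second page with the value in the query string.
// "page2.html" and the "username" key are hypothetical names for illustration.
function buildTargetUrl(page, key, value) {
  const params = new URLSearchParams();
  params.set(key, value); // URLSearchParams encodes the value when serialised
  return `${page}?${params.toString()}`;
}

// Page 2: read the value back from the query string.
// In a browser you would pass window.location.search here.
function readValue(search, key) {
  return new URLSearchParams(search).get(key); // null if the key is absent
}

const url = buildTargetUrl("page2.html", "username", "Ada Lovelace");
const search = url.slice(url.indexOf("?"));
const received = readValue(search, "username");
console.log(url, received);
```

A query string suits small, non-sensitive values because the value is visible in the address bar. Browser storage such as `sessionStorage` is another option when the value should not appear in the URL.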


REACTJS AT FULLSTACKSEEKHO

A Beginner’s Guide to React.js: Revolutionizing Web Development

React.js has taken the web development world by storm with its efficiency, flexibility, and scalability. If you’ve ever wondered how modern websites deliver such seamless user experiences, React is often the secret behind it. This blog will walk you through what React.js is, why it’s so popular, and how it simplifies building dynamic user interfaces.

What is React.js?

React.js, commonly called React, is an open-source JavaScript library created by Facebook. It focuses on building user interfaces (UIs), especially for single-page applications (SPAs), where users can navigate between different views without reloading the page. It follows a component-based architecture, allowing developers to break down interfaces into small, reusable elements.

Why Use React.js?

Reusable Components: In React, every part of the user interface is a component. Once created, these components can be reused across different pages or sections, saving time and making code more maintainable.

Virtual DOM for Better Performance: React uses a Virtual DOM to enhance performance. Instead of rewriting the actual DOM on every change (which can slow down the page), React compares the new Virtual DOM with the previous one and applies only the necessary changes to the real DOM, making UI updates faster and smoother.

Easy Data Management with One-Way Binding: React follows a unidirectional data flow, meaning data moves from parent to child components. This structured approach helps avoid messy data management in complex applications.

Powerful Developer Tools: React provides useful tools to inspect and debug components. The React Developer Tools extension makes it easy to monitor how data flows through components and track changes efficiently.

Large Ecosystem and Community: React has a vast community of developers, which translates to excellent documentation, extensive libraries, and rapid problem-solving support. Tools like React Router and Redux further enhance its capabilities.
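To make the Virtual DOM idea from the list above more concrete, here is a heavily simplified sketch of diffing two virtual trees and collecting only the changes. It is not React's actual reconciliation algorithm. The node shape `{ tag, props, children }`, the helper `h`, and the patch names are assumptions chosen for this illustration.

```javascript
// A virtual node is a plain object: { tag, props, children }, where children
// are virtual nodes or strings. This shape is an assumption for the sketch.
function h(tag, props = {}, children = []) {
  return { tag, props, children };
}

// Compare two virtual trees and return a list of patches that describe
// only what changed between them.
function diff(oldNode, newNode, path = "root") {
  if (oldNode === undefined) return [{ type: "CREATE", path, node: newNode }];
  if (newNode === undefined) return [{ type: "REMOVE", path }];
  if (typeof oldNode === "string" || typeof newNode === "string") {
    return oldNode === newNode ? [] : [{ type: "REPLACE", path, node: newNode }];
  }
  if (oldNode.tag !== newNode.tag) return [{ type: "REPLACE", path, node: newNode }];

  const patches = [];
  const keys = new Set([...Object.keys(oldNode.props), ...Object.keys(newNode.props)]);
  for (const key of keys) {
    if (oldNode.props[key] !== newNode.props[key]) {
      patches.push({ type: "SET_PROP", path, key, value: newNode.props[key] });
    }
  }
  const length = Math.max(oldNode.children.length, newNode.children.length);
  for (let i = 0; i < length; i++) {
    patches.push(...diff(oldNode.children[i], newNode.children[i], `${path}/${i}`));
  }
  return patches;
}

const before = h("ul", { class: "list" }, [h("li", {}, ["Apples"]), h("li", {}, ["Pears"])]);
const after = h("ul", { class: "list" }, [h("li", {}, ["Apples"]), h("li", {}, ["Plums"])]);
const patches = diff(before, after);
console.log(patches);
```

Only the text of the second list item differs, so the patch list contains a single entry. A renderer would then touch just that one node in the real DOM.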

Core Concepts of React.js

Components: React applications are built with components, which are like individual building blocks that can function independently. Each component handles a part of the UI and can be reused multiple times.

JSX (JavaScript XML): JSX is a special syntax that lets developers write HTML-like code directly within JavaScript. This makes the code easier to understand and improves readability.

State and Props

State: Represents the dynamic data that controls how a component behaves. When the state changes, the UI updates automatically.

Props: Short for properties, these are values passed from one component to another. Props allow for communication between components.
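The relationship between state and props can be modelled without React at all. The sketch below is a plain JavaScript model, not React's API: the parent owns the state, passes it down as props, and re-renders whenever the state changes. The names `Greeting`, `createApp`, and `setState` are hypothetical, and the "UI" is rendered to a string so that the example runs anywhere.

```javascript
// Child "component": a pure function of its props (rendered to a string here
// instead of real DOM so the sketch runs anywhere).
function Greeting(props) {
  return `<p>Hello, ${props.name}! Clicks: ${props.count}</p>`;
}

// Parent: owns the state and passes it down to the child as props.
function createApp(render) {
  let state = { name: "Ada", count: 0 };

  function setState(partial) {
    state = { ...state, ...partial }; // replace the state, never mutate in place
    render(App());                    // a state change triggers a re-render
  }

  function App() {
    return Greeting({ name: state.name, count: state.count });
  }

  render(App()); // initial render
  return { increment: () => setState({ count: state.count + 1 }) };
}

const frames = [];
const app = createApp((html) => frames.push(html));
app.increment();
console.log(frames);
```

Data flows in one direction only: the child never changes `count` itself, it just receives the current value each time the parent renders.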

React Hooks: React introduced hooks to manage state and side effects in functional components. Popular hooks include:

useState: Manages state in a component.

useEffect: Runs side effects like API calls or timers when components render or update.

React Router: For navigation within single-page applications, React Router helps manage routes, ensuring smooth transitions between different views without reloading the page.

How React Simplifies Development

React is built to handle the complexities of modern web applications efficiently. It provides:

Component-based architecture: Helps organize code logically and promote reuse.

Fast rendering with Virtual DOM: Makes updates smoother and improves performance.

Hooks for clean state management: Reduces the need for complex class-based components and makes code cleaner.

How to Get Started with React.js

To start with React, you’ll need Node.js installed on your machine. Once set up, you can use the Create React App (CRA) tool to quickly scaffold a React project with everything pre-configured. After installation, you can start building your components and styling your app as desired.

Best Practices in React Development

Keep Components Small and Focused: Each component should ideally handle one part of the UI. Smaller components are easier to debug and maintain.

Use Hooks Wisely: Avoid unnecessary use of hooks. For example, if a component doesn’t require state or side effects, you can skip them.

Consistent Folder Structure: Organize your project by placing related files (like components, styles, and assets) in the same folder to keep things manageable.

Use Prop Validation: It’s good practice to validate the props a component receives to prevent errors during development.

Optimize Performance: Use tools like React.memo to prevent unnecessary re-renders, and load components dynamically using React.lazy to improve app performance.

Why React Continues to Lead the Market

React has a clear edge due to its simplicity, efficiency, and flexibility. It’s used by companies like Facebook, Netflix, and Instagram to build highly interactive user interfaces. The continuous updates and improvements to React’s ecosystem keep it relevant in the rapidly evolving world of web development.

Conclusion

React.js has revolutionized the way developers build web applications by offering a simple yet powerful way to create interactive UIs. Its component-based approach, Virtual DOM, and hooks make development faster and more efficient. Whether you’re working on a personal project or a large-scale enterprise application, React provides the tools needed to create responsive, high-performance interfaces.

If you are interested in building dynamic web applications, React is a great place to start. Dive in, explore its features, and experience how it can transform your development process!

Fullstack Seekho is launching a new full stack training course in Pune with a 100% job guarantee. Below is the list of Full Stack Developer courses in Pune:

1. Full Stack Web Development Course in Pune and MERN Stack Course in Pune

2. Full Stack Python Developer Course in Pune

3. Full Stack Java Course in Pune and Java Full Stack Developer Course with Placement

4. Full Stack Developer Course with Placement Guarantee

Visit the website and fill in the form, and our counsellors will connect with you!


React js development

React.js is a popular JavaScript library for building user interfaces, particularly for single-page applications where UI updates are frequent.

Here are some key concepts and tips for React.js development:

Understanding React Components:

React applications are built using components, which are reusable and self-contained pieces of code.

Components can be written as functions or as classes. Before hooks, functional components were stateless; with hooks, they can manage state as well.

Use functional components whenever possible, as they are simpler and easier to understand. However, class components are still needed for a few features, such as error boundaries.

JSX (JavaScript XML):

JSX is a syntax extension for JavaScript, used with React to describe what the UI should look like.

It allows you to write HTML-like code in your JavaScript files, making it more readable and expressive.

State and Props:

State represents the internal data of a component that can change over time. Use the useState hook to manage state in functional components.

Props (short for properties) are used to pass data from a parent component to a child component.

Component Lifecycle:

For class components, there are lifecycle methods (e.g., componentDidMount, componentDidUpdate, componentWillUnmount) that you can use to perform actions at different stages of a component's life.

React Hooks:

Hooks are functions that let you use state and other React features in functional components.

Commonly used hooks include useState, useEffect, useContext, and useReducer.

Managing State with Context API:

Context provides a way to share values (like themes, authentication status) between components without explicitly passing props through every level of the component tree.

Routing:

Use a routing library like react-router for handling navigation and creating a single-page application experience.

Forms and Controlled Components:

Use controlled components for forms, where the form elements are controlled by the component's state.

Styling:

CSS-in-JS libraries like styled-components or traditional CSS can be used for styling React components.

State Management:

For more complex state management, consider using state management libraries like Redux or Recoil.

Error Handling:

Implement error boundaries (class components that define componentDidCatch or static getDerivedStateFromError, available in React 16+) to catch JavaScript errors anywhere in the component tree below them.

Testing:

Use testing libraries like Jest together with React Testing Library (@testing-library/react) to ensure the reliability of your components.

Optimizing Performance:

Use tools like React DevTools and follow best practices to optimize the performance of your React application. For more information visit us – wamasoftware


The Complete Guide of Google's Core Web Vitals: Increased SEO Ranking for Your Website

One of the most recent innovations that has caught the interest of SEO experts and website owners alike is Google's Core Web Vitals. These metrics have a big role in the user experience of a website and, consequently, its SEO ranking.

In this guide, we will delve into the world of Core Web Vitals, helping you understand their importance and offering practical advice to improve the technical SEO of your website.

Understanding Core Web Vitals

Core Web Vitals have become a crucial set of metrics in Google’s mission to provide users with the best experience possible. They focus on three essential aspects of the user experience: loading performance, interactivity, and visual stability.

Let’s examine each of these metrics in more detail:

Largest Contentful Paint (LCP): This metric assesses how quickly a page loads. It measures the time until the largest content element in the viewport has rendered. For a webpage that loads quickly, aim for an LCP of under 2.5 seconds.

First Input Delay (FID): FID measures interactivity and responsiveness. It is the time between a user’s first interaction, such as clicking a link, and the moment the browser begins to respond to it. The ideal target FID for a smooth user experience is lower than 100 milliseconds.

Note: From March 2024 onwards, Google replaces FID with a new metric, Interaction to Next Paint (INP). INP measures how quickly a webpage reacts, using data from the Event Timing API.

If clicking or doing something on a webpage makes it slow to respond, that’s not good for users. INP will check how long it takes for every click or action you do on the page and give a single value that will show how fast most of these actions were. A lower INP would mean the page usually reacts quickly to pretty much everything you do on it.

Cumulative Layout Shift (CLS): CLS evaluates visual stability by tracking unexpected changes in the position of visual elements while the page loads. A CLS score of less than 0.1 is regarded as excellent and means users are unlikely to see annoying layout shifts.
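The targets quoted above can be turned into a simple check. This is a minimal sketch that uses only the thresholds stated in this guide (LCP under 2.5 seconds, FID under 100 milliseconds, CLS under 0.1). The function name `checkCoreWebVitals` and the sample measurements are made up for illustration.

```javascript
// "Good" targets as quoted in this guide.
const GOOD_THRESHOLDS = {
  lcp: 2.5, // seconds
  fid: 100, // milliseconds
  cls: 0.1, // unitless layout-shift score
};

// Returns, per metric, whether the measured value is below its target,
// plus an overall "passes" flag that is true only if every metric is.
function checkCoreWebVitals(measured) {
  const result = {};
  for (const [metric, limit] of Object.entries(GOOD_THRESHOLDS)) {
    result[metric] = measured[metric] < limit;
  }
  result.passes = Object.keys(GOOD_THRESHOLDS).every((metric) => result[metric]);
  return result;
}

// Hypothetical measurements for one page.
const report = checkCoreWebVitals({ lcp: 1.9, fid: 140, cls: 0.04 });
console.log(report);
```

In this sample the page loads fast and is visually stable, but the slow first input response means it does not pass overall.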

Optimising Google’s Core Web Vitals for Improved Technical SEO

Now that we know what the main metrics are, let’s look at ways to improve Core Web Vitals for better technical SEO:

Prioritise Page Speed: To minimise image size without sacrificing quality, compress images and use appropriate file formats. Use browser caching to store frequently used resources, cutting down loading times for repeat visits. Minify CSS, JavaScript, and HTML files to remove unnecessary characters, spaces, and line breaks.

Load Critical Resources Efficiently: Use lazy loading for images and videos so that they load only as visitors scroll down the page. Defer and optimise third-party scripts so that they do not block the main thread.

Optimise Server Response Times: Select a dependable web hosting company with quick server response times. Use Content Delivery Networks (CDNs) to distribute website content across several servers, cutting down on latency.

Minimise Browser Rendering Bottlenecks: To avoid blocking other page elements, use asynchronous loading for JavaScript files. Put CSS files in the document head to allow the browser to render content progressively.

Prioritise Above-the-fold Content: Make sure that the content displayed above the fold, the area of the page that is immediately visible, loads quickly. This will help to capture users’ attention and increase engagement.

Measuring and Monitoring Core Web Vitals

Core Web Vitals must be regularly measured and monitored in order to be properly optimised. Consider the following:

Google PageSpeed Insights: This tool gives you insights into how well your website is performing and highlights opportunities for improvement and optimisation with respect to Core Web Vitals and technical SEO.

Google Search Console: To get precise suggestions for improvement, keep an eye on your Core Web Vitals data in the Google Search Console.

Enhancing Google’s Core Web Vitals through technical SEO optimisation is a crucial step towards improving user experience and search engine rankings for your website, and IKF can help you with this.

Partner with IKF, a top SEO agency in India, if you’re searching for a knowledgeable partner to achieve this goal. We are committed to assisting you in every way we can. To learn more about our suite of services, contact us now!

FAQs

What are Google's Core Web Vitals?

Google’s Core Web Vitals are a set of user experience metrics that measure loading speed, interactivity, and visual stability of a website. These metrics help assess and improve the overall performance and user satisfaction of web pages.

How do I make my website pass Google's Core Web Vitals?

To ensure your website passes Google’s Core Web Vitals, focus on optimising loading speed, interactivity, and visual stability. This involves tasks such as compressing images, minimising code, leveraging browser caching, and prioritising above-the-fold content, all aimed at delivering a smoother and faster user experience.

What are the three pillars of Core Web Vitals?

The three pillars of Core Web Vitals are loading performance, interactivity, and visual stability. These pillars are measured by Largest Contentful Paint (LCP), First Input Delay (FID), and Cumulative Layout Shift (CLS) respectively, to assess the user experience and performance of a website.

How do you analyse Core Web Vitals?

To analyse Core Web Vitals, you can use tools like Google PageSpeed Insights and Google Search Console. These tools provide insights and recommendations to measure and monitor metrics such as Largest Contentful Paint (LCP), First Input Delay (FID), and Cumulative Layout Shift (CLS) to improve the overall user experience and website performance.

How do I monitor Core Web Vitals in Google Analytics?

Core Web Vitals data is not directly supplied by Google Analytics. However, you can access these statistics using Google Search Console’s Core Web Vitals report, which is integrated with Google Analytics and gives you information about the speed, usability, and stability of your website’s appearance.


What Is Web Scraping? – A Complete Guide for Beginners

In today’s competitive world, everybody wants ways to innovate and use new skills. Web scraping (also called data scraping or web data extraction) is an automated procedure that extracts data from websites and exports it in a structured format.

Web scraping is particularly useful if a public site you want data from doesn’t offer an API or offers only limited access to its data.

In this blog, we will throw light on this concept, and you will learn:

What is web scraping?

The fundamentals of web scraping

What is a web scraping procedure?

What is web scraping utilized for?

The best sources to know more about web scraping

What is Web Scraping?

Web scraping is the process of gathering structured web data in an automated manner. It is also known as web data scraping or data extraction.

Some critical use cases of web scraping include price intelligence, price monitoring, lead generation, news monitoring, and market research.

Usually, web scraping is used by businesses and people who need publicly accessible data to produce meaningful insights and make intelligent decisions.

If you have ever copied and pasted data from a site, you have performed the same function as a web scraper, only manually. Unlike that tedious manual process, web scraping uses automation to retrieve hundreds, millions, or even billions of data points.

Whether you use a data scraper yourself or outsource a web scraping project to a data extraction partner, you will need to understand the basics of web scraping.

Web Scraping Basics

A web data scraper automates the procedure of scraping data from different websites rapidly and precisely. The procedure is simple and involves two parts: a web crawler and a web scraper. The scraped data is delivered in a well-structured format, which makes it easier to study and use in your projects.

The crawler leads the scraper through the internet, and the scraper extracts the requested data.

Web Scraping & Web Crawling – The Difference

Web crawlers, or “spiders”, are automated programs that browse the internet to index and discover content by following links. In many projects, you first “crawl” the web or a particular website to find URLs, which you then pass on to a scraper.

Web scrapers are designed to rapidly and precisely extract data from web pages. Web scraping tools vary widely in design and complexity, depending on the project.

A significant part of each web scraper is its data locators, which are used to find the data you want to extract from an HTML file. Commonly, CSS selectors, XPath, regex, or a combination of them is applied.

Understanding the differences between a web scraper and a web crawler will assist you in your progress with data scraping projects.

Web Scraping Procedure

Web scraping can be immensely valuable for producing insights. There are two ways to get web data:

Do It Yourself with web scraping tools

This is what a typical DIY web scraping procedure looks like:

1. Identify the target website

2. Collect the URLs of the pages you want to scrape data from

3. Make a request to each URL to get the HTML of the page

4. Use locators to find the data in the HTML

5. Save the data in a CSV or JSON file or any other well-structured format
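The five steps above can be sketched in a few lines. This is an illustration under several assumptions: the URL and the sample HTML are invented, and the HTTP request in step 3 is replaced by a stand-in function (`fetchHtml`) that returns canned markup so the example runs offline. A regex serves as the locator here because the text lists it as one option; CSS selectors or XPath via an HTML parser are usually more robust on real pages.

```javascript
// Steps 1-2: the target site and its page URLs (hypothetical placeholder URL).
const pageUrls = ["https://example.com/products?page=1"];

// Step 3: a stand-in for the HTTP request so the sketch runs offline.
// A real scraper would request the URL and read the response body instead.
function fetchHtml(url) {
  return `
    <ul>
      <li class="product"><span class="name">Kettle</span><span class="price">24.99</span></li>
      <li class="product"><span class="name">Toaster</span><span class="price">31.50</span></li>
    </ul>`;
}

// Step 4: a regex locator that pulls the name and price out of each item.
function extractProducts(html) {
  const locator = /<span class="name">([^<]+)<\/span><span class="price">([\d.]+)<\/span>/g;
  return [...html.matchAll(locator)].map(([, name, price]) => ({
    name,
    price: Number(price),
  }));
}

// Step 5: serialise the records as CSV (no quoting or escaping, for brevity).
function toCsv(rows) {
  const header = "name,price";
  const lines = rows.map((row) => `${row.name},${row.price}`);
  return [header, ...lines].join("\n");
}

const products = pageUrls.flatMap((url) => extractProducts(fetchHtml(url)));
const json = JSON.stringify(products); // the same records as JSON
const csv = toCsv(products);
console.log(json);
console.log(csv);
```

The regex only matches this exact markup, which illustrates why scrapers need maintenance whenever a site's layout changes.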

You have to deal with many challenges if you want data at scale.

For instance, you have to maintain your scraping tools and extractors whenever a website’s layout changes, manage proxies, execute JavaScript, and work around anti-bot measures. These are technical problems that consume internal resources.

There are various open-source data scraping tools that you can use; however, they have their limitations.

That’s one reason why many businesses outsource their data projects.

Outsource Web Scraping Using Actowiz Solutions

1. Our team collects your requirements about the project.

2. Our expert team of data scraping specialists writes the scraper(s) and sets up the infrastructure to collect and structure the data according to your needs.

3. Lastly, we deliver data in the desired formats and frequency.

Ultimately, the scalability and flexibility of web data scraping ensure that project requirements, no matter how specific, can be met with ease.

E-commerce business intelligence managers inform their retail units with competitor pricing based on web-scraped insights, investors research and analyze investment opportunities, and marketing teams outpace the competition with deep insights, all thanks to the growing adoption of data scraping as a fundamental part of daily business.

Outsourcing web scraping is the way to go for companies that depend on web data insights.

Why Outsource All Your Web Scraping Needs?

High-Quality Data: Data scraping providers like Actowiz Solutions have high-tech infrastructure, brilliant developers, and tremendous experience, ensuring accurate and complete data.

Lower Cost: Getting web data from skilled providers can be expensive; however, outsourcing is the more cost-effective choice compared to creating an in-house infrastructure and hiring numerous engineers and developers.

Legal Compliance: You might not be fully aware of the dos and don’ts of scraping; a data provider with an internal legal team will be. Outsourcing helps you stay legally compliant.

Do you need to know more about how Actowiz Solutions’ web scraping services can add value to a scraping project? Contact us to know more!

What is a Web Scraping Tool?

A web scraping tool is a software program designed to extract relevant data from sites. You use a data scraper to collect specific datasets from different websites.

A website scraper is used as part of the web scraping procedure to make HTTP requests to a target website and extract web data from its pages. It parses content that is publicly available and visible to users, and that the server renders as HTML.

Different types of data extraction tools and web scrapers like Actowiz Solutions’ Automatic Extraction have capabilities that can be customized for various data scraping projects.

The Importance of Scraping Data

Web scraping offers something valuable that nothing else can offer: it provides well-structured data from a public website.

Beyond that convenience, the real power of web scraping lies in its ability to build and power some of the world’s most groundbreaking business apps.

‘Transformative’ doesn’t begin to describe how some companies use web-scraped data to enhance their operations, informing everything from executive decisions to individual customer service experiences.

What are the Uses (Use Cases) of Web Scraping?

Alternative Finance Data

Decision-making has never been as informed, nor data as insightful, and the world’s top companies are increasingly consuming web-extracted data because of its strategic value.

Company Fundamental Estimation

News Monitoring

Public Sentiments Integration

Scraping Insights from Different SEC Filings

Brand Monitoring

In today’s highly competitive market, protecting your online reputation is a top priority. Whether you sell products online and have a strict pricing policy to enforce, or you want to understand how people perceive your products, brand monitoring with web scraping can provide you with this type of data.

Business Automation

In some situations, it can be cumbersome to get access to data. You may need to extract data from a website, whether your own or a partner’s, in a well-structured way. If there is no easy way to do that internally, it makes sense to build a scraper and grab the data rather than trying to work your way through complex internal systems.

Content & News Monitoring

If a company depends on timely news analysis or frequently appears in the news, scraping news data is an effective way to monitor, aggregate, and parse the most important stories from its industry.

Competitor Monitoring

Investment Decisions Making

Online Public Sentiments Analysis

Political Campaigns

Sentiment Analysis

Lead Generation

Lead generation is an important marketing and sales activity for many businesses. In HubSpot’s 2020 report, 61% of inbound marketers said that generating leads and traffic was their main challenge. Luckily, web data extraction can give you access to well-structured lead lists from the web.

MAP Monitoring

MAP (Minimum Advertised Price) monitoring is a standard practice for ensuring a brand’s online pricing is aligned with its pricing policy. With tons of distributors and resellers, it’s impossible to monitor prices manually. That’s where web scraping can be helpful, as you can observe your products’ pricing without lifting a finger.
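Once reseller prices have been scraped, the MAP check itself is a simple comparison. The following is a minimal sketch with invented data: the SKUs, sellers, prices, and the function name `findMapViolations` are all hypothetical.

```javascript
// Hypothetical MAP policy: product SKU -> minimum advertised price.
const mapPolicy = { "KETTLE-01": 24.99, "TOASTER-02": 30.0 };

// Hypothetical listings, as a scraper might have collected them from resellers.
const listings = [
  { sku: "KETTLE-01", seller: "ShopA", price: 24.99 },
  { sku: "KETTLE-01", seller: "ShopB", price: 21.5 },
  { sku: "TOASTER-02", seller: "ShopA", price: 32.0 },
];

// Return every listing that is advertised below the MAP for its SKU.
// Listings for SKUs without a policy entry are ignored.
function findMapViolations(policy, scraped) {
  return scraped
    .filter((item) => item.sku in policy && item.price < policy[item.sku])
    .map((item) => ({ ...item, map: policy[item.sku] }));
}

const violations = findMapViolations(mapPolicy, listings);
console.log(violations);
```

Run on a schedule against fresh scrape results, a check like this would flag only the listings that need follow-up.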

Market Research

Market research is essential and should be driven by the most accurate information available. With web scraping, you get high-volume, high-quality, and highly insightful web-extracted data of all shapes and sizes, fueling business intelligence and market analysis worldwide.

Alternative Finance Data

Competitor Monitoring

Market Pricing

Market Trends Analysis

Point of Entry Optimization

Research & Development

Price Intelligence

Scraping pricing and product information from different e-commerce websites and turning it into intelligence is essential for modern e-commerce companies that want to make better pricing and marketing decisions based on data.

Benefits of price intelligence and web price data include:

Brand & MAP Compliance

Competitor Monitoring

Dynamic Pricing

Product Trends Monitoring

Revenue Optimization

Real Estate

The digital transformation of real estate over the last two decades threatens to disrupt traditional firms and create powerful new players in the industry.

By including web-extracted data in their daily business, brokerages and agents can defend against online competition and make well-informed decisions in the market.

Assessing Rental Yields

Evaluating Property Values

Examining Vacancy Rates

Understanding Market Directions

Some Other Use Cases

The applications of web scraping are nearly boundless and continue to grow. Web data extraction is broadly used for:

Academic Research, Real estate, etc.

Journalism, News, and Reputation Monitoring

Lead Generation and Data-Driven Marketing

Risk Management and Competitor Analysis

SEO Monitoring

FAQs

What is web scraping used for?

Web scraping is the process of using bots to extract data and content from a site. Unlike screen scraping, which only copies the pixels displayed onscreen, web scraping extracts the underlying HTML code and, with it, the data stored in a database. A scraper can then replicate the entire website content in another place.

Is web scraping legal?

Web scraping is generally legal when you extract publicly accessible data. However, some types of data are protected by international regulations; therefore, be careful when extracting personal data, confidential data, or intellectual property.

What is a web scraping example?

Web scraping means extracting data into a format that is more useful for the user. For instance, you might extract product data from an e-commerce site into an Excel spreadsheet. Though web scraping can be done manually, it is usually better done with automated tools.

Why is Python used for web scraping?

You can do web scraping using different methods; however, many favor Python because of its ease of use, extensive library collection, and easily understandable syntax. Web scraping is extremely important for business intelligence, data science, and investigative reporting.

Do hackers use web scraping?

A web scraping bot can collect user data from different social media websites. Then, by extracting websites that contain addresses with other personal details and relating the results, a hacker might engage in identity crimes like the submission of fraudulent applications for credit cards.

Does Amazon allow web scraping?

Web scraping can help you extract specific data from the Amazon website into a JSON file or spreadsheet. You can even make that an automated procedure that runs daily, weekly, or monthly to keep the data up to date.

What is web scraping vs. API?

An API is usually limited to providing data from one website (unless it is an aggregator); with web scraping, you can collect data from many different websites. Also, an API only gives you access to the particular set of functions that its developers expose.

Is web scraping easy to use?

A data scraper accurately and quickly automates scraping data from different websites. The scraped data is provided in a structured format to make it easier to study and use in your projects. The procedure is simple and involves two parts: a web crawler and a web scraper.

Which language is easiest for web scraping?

Python is a popular choice for programmers building a data scraping tool. It is one of the most commonly used programming languages for this purpose, largely because of its ease of use and its ability to handle virtually any task associated with web scraping.

What is the Best Way to Use Web Data?

At Actowiz Solutions, we have worked in this web scraping industry for many years. We make data scraping very easy. We have provided scraped data for over 1,000 customers using our data scraping services. Our customers contact us so that they can exclusively focus on making intelligent decisions and making their products while we offer them quality data. If you need high-quality and timely data, then contact Actowiz Solutions now! You can also reach us for all your web scraping service and mobile app data scraping service requirements.


How to Pass a JavaScript Value Between HTML Pages ☞ https://bit.ly/3aNJbAZ #html5 #css3


PHP Training Course

PHP Course Overview

PHP is a widely-used general-purpose scripting language that is especially suited for Web development and can be embedded into HTML.

PHP can generate dynamic page content

PHP can create, open, read, write, and close files on the server

PHP can collect form data

PHP can send and receive cookies

PHP can add, delete, modify data in your database

PHP can restrict users from accessing some pages on your website

PHP can encrypt data

With PHP you are not limited to outputting HTML. You can output images, PDF files, and even Flash movies. You can also output any text, such as XHTML and XML.

PHP Training Course Prerequisite

HTML

CSS

JavaScript

Objectives of the Course

PHP runs on different platforms (Windows, Linux, Unix, Mac OS X, etc.)

PHP is compatible with almost all servers used today (Apache, IIS, etc.)

PHP has support for a wide range of databases

PHP is free. Download it from the official PHP resource: www.php.net

PHP is easy to learn and runs efficiently on the server-side

PHP Training Course Duration

45 working days, 1 hour 30 minutes daily

PHP Training Course Overview

An Introduction to PHP

History of PHP

Versions and Differences between them

Practicality

Power

Installation and configuring Apache and PHP

PHP Basics

Default Syntax

Styles of PHP Tags

Comments in PHP

Output functions in PHP

Datatypes in PHP

Configuration Settings

Error Types

Variables in PHP

Variable Declarations

Variable Scope

PHP’s Superglobal Variables

Variable Variables

Constants in PHP

Magic Constants

Standard Pre-defined Constants

Core Pre-defined Languages

User-defined Constants

Control Structures

Execution Control Statements

Conditional Statements

Looping Statements with Real-time Examples

Functions

Creating Functions

Passing Arguments by Value and Reference

Recursive Functions

Arrays

What is an Array?

How to create an Array

Traversing Arrays

Array Functions

Include Functions

Include, Include_once

Require, Require_once

Regular Expressions

Validating text boxes, emails, phone numbers, etc.

Creating custom regular expressions

Object-Oriented Programming in PHP

Classes, Objects, Fields, Properties, __set(), Constants, Methods

Encapsulation

Inheritance and types

Polymorphism

Constructor and Destructor

Static Class Members, instanceof Keyword, Helper Functions

Object Cloning and Copy

Reflections

PHP with MySQL

What is MySQL

Integration with MySQL

MySQL functions

Gmail Data Grid options

SQL Injection

Uploading and downloading images in Database

Registration and Login forms with validations

Paging, Sorting, …

Strings and Regular Expressions

Declarations styles of String Variables

Heredoc style

String Functions

Regular Expression Syntax (POSIX)

PHP’s Regular Expression Functions (POSIX Extended)

Working with the Files and Operating System

File Functions

Open, Create and Delete files

Create Directories and Manipulate them

Information about Hard Disk

Directory Functions

Calculating File, Directory and Disk Sizes

Error and Exception Handling

Error Logging

Configuration Directives

PHP’s Exception Class

Throw New Exception

Custom Exceptions

Date and Time Functions

Authentication

HTTP Authentication

PHP Authentication

Authentication Methodologies

Cookies

Why Cookies

Types of Cookies

How to Create and Access Cookies

Sessions

Session Variables

Creating and Destroying a Session

Retrieving and Setting the Session ID

Encoding and Decoding Session Data

Auto-Login

Recently Viewed Document Index

Web Services

Why Web Services

RSS Syntax

SOAP

How to Access Web Services

XML Integration

What is XML

Create an XML file from PHP with Database records

Reading Information from XML File

MySQL Concepts

Introduction

Storage Engines

Functions

Operators

Constraints

DDL commands

DML Commands

DCL Command

TCL Commands

Views

Joins

Cursors

Indexing

Stored Procedures

MySQL with PHP Programming

MySQL with SQL Server (Optional)

SPECIAL DELIVERY

Protocols

HTTP Headers and types

Sending Mails using PHP

Email with Attachment

File Uploading and Downloading using Headers

Implementing Chat Applications using PHP and Ajax

SMS Gateways and sending SMS to Mobiles

Payments gateways and How to Integrate them

With Complete

MVC Architecture

DRUPAL

JOOMLA

WordPress

AJAX

CSS

JQUERY (Introduction and few plugins only)


Text

Is safari or chrome e


Owning an iPhone, Android smartphone, Mac or PC, you have most likely come across the words “Safari” and “Google” at some point. Safari and Google are two of the most recognized browsing and search platforms on the internet. Safari is a web browser that is owned and operated by Apple. Google is a search engine operated by Google under its parent company Alphabet, and it can be used within the Safari web browser. Google Chrome is also a web browser like Safari, but it is owned and operated by Google. A range of features makes Safari and Google Chrome different from each other, and a few are quite similar. Read on below as I cover in more detail what sets Safari and Google Chrome apart, and which might be the better web browsing option for your devices.

The browser on which the current page is opening can be checked using JavaScript. The userAgent property of the navigator object returns the user-agent header string sent by the browser. This user-agent string contains information about the browser in the form of certain keywords that may be tested for their presence. The presence of a specific keyword can be detected using the indexOf() method, which returns the position of the first occurrence of the specified string value in a string. If the value does not occur in the string, “-1” is returned. The user-agent string of the browser is accessed using the navigator.userAgent property and then stored in a variable. The presence of each browser's keyword in this user-agent string is then checked one by one.

Detecting the Chrome browser: The user-agent keyword of the Chrome browser is “Chrome”. This value is passed to the indexOf() method to look for it in the user-agent string. As the indexOf() method returns a value greater than “-1” to denote a successful search, the “greater-than” operator is used to produce a boolean value indicating whether the search was successful or not. This is done for all the following tests.
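The checks described above can be sketched roughly as follows. This is a minimal illustration rather than the article's own code: the function name `detectBrowser` is hypothetical, the keywords "OPR", "MSIE" and "Trident" are assumptions about common user-agent strings, and the order of the tests matters because several browsers include other browsers' keywords.

```javascript
// Hypothetical helper: guess the browser from a user-agent string
// by testing for keywords with indexOf(), one browser at a time.
function detectBrowser(userAgent) {
  // indexOf() returns -1 when the keyword is absent, so "> -1" means "found".
  const has = (keyword) => userAgent.indexOf(keyword) > -1;

  // Opera's user agent also contains "Chrome" and "Safari", so test it first.
  if (has("OPR") || has("Opera")) return "Opera";
  if (has("Firefox")) return "Firefox";
  // Assumption: older IE sends "MSIE", IE 11 sends "Trident".
  if (has("MSIE") || has("Trident")) return "Internet Explorer";
  // Chrome's user agent also contains "Safari", so Chrome is tested before Safari.
  // Other Chromium-based browsers contain "Chrome" too and are not separated here.
  if (has("Chrome")) return "Chrome";
  if (has("Safari")) return "Safari";
  return "Unknown";
}

// In a browser you would call: detectBrowser(navigator.userAgent)
```

Keyword sniffing of this kind is fragile, so treat the result as a hint rather than a guarantee.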


Text

Regular Expressions With JavaScript

Introduction

Regular expressions (RegExp) are a very useful and powerful part of JavaScript. The purpose of a RegExp is to determine whether or not a given string value is valid, based on a set of rules.

Too many tutorials don't really teach you how to write regular expressions. They simply give you a few examples, most of which are unfortunately very difficult to understand, and then never explain the details of how the RegExp works. If you've been frustrated by this as well, then you've come to the right place! Today, you're going to actually learn how to write regular expressions.

To learn how they work, we are going to start with a very simple example and then continually add to the rules of the RegExp. With every rule that we add, I will explain the new rule and what it adds to the RegExp.

A RegExp is simply a set of rules to decide whether a string is valid. Let's assume that we have a web page where our users enter their date of birth. We can use a regular expression in JavaScript to validate whether or not the date that they enter is in a valid date format. After all, we don't want them to enter something that is not a date.

Substring search

First, let's write a RegExp that requires our users to enter a date that contains this value: "MAR-16-1981". Note that they can enter anything they want, as long as the value contains MAR-16-1981 somewhere within it. For example, this value would also be valid with this expression: TEST-MAR-16-1981-MORE.

Below is the JavaScript for a web page with a form that lets our users enter their date of birth.

    function validateDateFormat(inputString) {
        // Passes any value that contains MAR-16-1981 somewhere inside it.
        var rxp = new RegExp(/MAR-16-1981/);
        var result = rxp.test(inputString);
        if (result === false) {
            alert("Invalid Date Format");
        }
        // Returning false stops the form from being submitted.
        return result;
    }

Be aware of the following key pieces of this code:

1. When the submit button is clicked, our JavaScript function is called. If the value fails our test, we display an alert pop-up box with an error message. In this scenario, the function also returns false. This ensures that the form doesn't get submitted.

2. Note that we're using the RegExp JavaScript object. This object provides us with a "test" method to determine whether the value passes our regular expression rules.

Exact match search

The RegExp above allows any value that contains MAR-16-1981. Next, let's expand on our regular expression to only allow values that equal MAR-16-1981 exactly. There are two things we need to add to our regular expression. The first is a caret ^ at the start of the regular expression. The second is a dollar sign $ at the end of the expression. The caret ^ requires that the value we are testing cannot have any characters preceding the MAR-16-1981 value. The dollar sign $ requires that the value cannot have any trailing characters after the MAR-16-1981 value. Here's how our regular expression looks with these new rules. This ensures that the value entered must be exactly MAR-16-1981.

/^MAR-16-1981$/
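To make the difference between the two rules concrete, here is a small sketch that runs the same sample values through both expressions. The variable names and the sample values are only illustrative.

```javascript
// Substring rule: the value only has to contain MAR-16-1981 somewhere.
const containsDate = /MAR-16-1981/;
// Exact rule: ^ and $ anchor the match to the start and end of the value.
const exactDate = /^MAR-16-1981$/;

const samples = ["MAR-16-1981", "TEST-MAR-16-1981-MORE", "APR-16-1981"];
for (const value of samples) {
  // Print each value with the result of the substring test and the exact test.
  console.log(value, containsDate.test(value), exactDate.test(value));
}
```

Only the first sample passes both tests; the second passes the substring rule alone, and the third fails both.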

Character Matching

We have already learned how to validate string values that either contain a certain substring or are equal to a certain value with a regular expression. However, for our HTML form, we probably don't want to require our users to enter one exact date. It's much more likely that we want them to enter any date, as long as it meets certain format rules. To start with, let's look at modifying the RegExp so that any three letters can be entered (not just "MAR"). Let's also require that these letters be entered in uppercase. Here's how this rule looks when added to our regular expression.

/^[A-Z]{3}-16-1981$/

All we've done is replace "MAR" with [A-Z]{3}, which says that any letter between A and Z may be entered three times. Let's look at a few variations of the character matching rules to learn about some other ways that we can match patterns of characters.

The rule above only allows three uppercase characters. What if you wanted to allow three characters that could be uppercase or lowercase (i.e. case-insensitive)? Here's how that rule would look in a regular expression.

/^[A-Za-z]{3}-16-1981$/

Next, let's change our rule to allow three or more characters. The {3} quantifier says that exactly three characters must be entered for the month. We can allow three or more characters by changing this quantifier to {3,}.

/^[A-Za-z]{3,}-16-1981$/

If we want to allow one or more characters, we can simply use the plus-sign + quantifier.

/^[A-Za-z]+-16-1981$/
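The three quantifiers can be compared side by side with a short sketch like the one below. The helper name `monthLengthChecks` and the sample values are assumptions made for illustration.

```javascript
const exactlyThree = /^[A-Za-z]{3}-16-1981$/;  // {3}: exactly three letters
const threeOrMore = /^[A-Za-z]{3,}-16-1981$/;  // {3,}: three or more letters
const oneOrMore = /^[A-Za-z]+-16-1981$/;       // +: one or more letters

// Hypothetical helper: report which of the three rules a value passes.
function monthLengthChecks(value) {
  return {
    exactlyThree: exactlyThree.test(value),
    threeOrMore: threeOrMore.test(value),
    oneOrMore: oneOrMore.test(value),
  };
}

console.log(monthLengthChecks("Mar-16-1981"));   // three letters
console.log(monthLengthChecks("March-16-1981")); // five letters
console.log(monthLengthChecks("M-16-1981"));     // one letter
```

A three-letter month passes every rule, a five-letter month fails only the {3} rule, and a single letter passes only the + rule.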

Ranges

Up until now, we've specified ranges of letters [A-Z] and numbers [0-9]. There are a variety of ways to write ranges within regular expressions. You can specify a set such as [abc] to match a or b or c. You can also specify a negated set such as [^abc] to match anything that is not an a or b or c.
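As a quick illustration of these sets, the sketch below tests single characters against [abc] and [^abc], and a short string against the digit range [0-9]. The variable names are illustrative only.

```javascript
const oneOfAbc = /^[abc]$/;   // exactly one character: a, b or c
const notAbc = /^[^abc]$/;    // exactly one character that is NOT a, b or c
const digitsOnly = /^[0-9]+$/; // one or more digits and nothing else

console.log(oneOfAbc.test("a"), notAbc.test("a"));
console.log(oneOfAbc.test("d"), notAbc.test("d"));
console.log(digitsOnly.test("1981"), digitsOnly.test("19a1"));
```

Note that the caret means "not" only when it is the first character inside the square brackets; outside them it still anchors the start of the value.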


Text

I “LIKE” programming

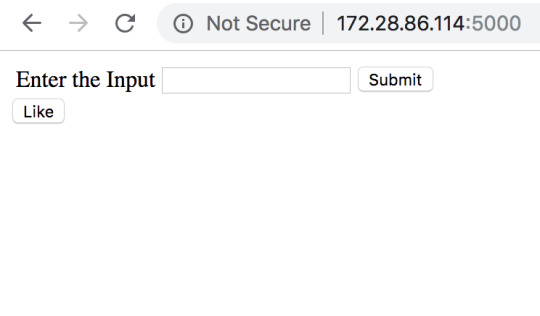

Well, just like this blog, when it came to the like system, I wasn’t entirely sure where to start. I had started working on this before we had decided to go with AR built in Unity, so I figured I would get the mechanics working and then be able to transfer them into Unity. Initially, when we were thinking of building a physical model or projection mapping, I was going to have the like button on a webpage so that anyone could access it, and inherently that’s where I started. I had the thought of using a Python script to build the whole back-end mechanic of the system, with the data passed from the user via the webpage. After I quickly ran this by Liam (who has done something similar before), he agreed that it would be the easiest way and that we could have multiple users at once, which is what we wanted. I built the most basic version of the “front end” part so that someone could input their name and then like it, so the likes would be attributed to them, just like it is on social media.

Liam recommended using the Flask module for Python to host the server (and webpage for testing). Once I had installed that, I managed to get a connection between the two and even, by complete fluke, was getting it to receive data from multiple devices and register that it was from different devices. I personally was stoked about this because it was 90% of the like system working, but then Liam pointed out that, as we didn’t know why it worked, if it stopped working we also wouldn’t be able to pick out why. I figured it was in my best interests to learn the best practice for this rather than get the minimum working version, as it would be great reference material for the future. In these early stages, Liam helped me a lot by explaining why one way was better than another and generally guiding me through, which was super helpful as I was coming from almost no coding background. The main thing I am super grateful for is him introducing me to JSON, which I’ll be honest was an absolute pain at first, but I now realise it was definitely the best way to go as it was far more reliable and actually made sense. So essentially what was happening is that the HTML page was passing the name that was submitted by the user to the Python script every time the like button was clicked. The script would then add a new value to its own defined variable “like” and count them like that. The bonus of doing it like this is that if someone played once and then after a while came back to have another go, their “score” would compound and keep increasing. On that note though, the downside is that if two users input the same name they will have a super score rather than two separate scores. While this would be a downside for an actual game where the score would need to be counted from just one game at a time and not an overall total, for our project it sort of just adds to it.
The idea is that the more people “like” the disaster that happens, the more it proves our point that social media is building a screen which separates people from reality, where they can simply click a button to show their support, and the more they do that, the more they are helping? Or at least that’s the theory.
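To picture the counting logic, here is a rough stand-in written in JavaScript rather than the Python/Flask script actually used for the project. Everything in it is an assumption for illustration: the `likes` store, the function names and the `{"name": ...}` message shape are hypothetical, and there is no real server here, only the tallying step.

```javascript
// Hypothetical in-memory store: user name -> total likes so far.
const likes = new Map();

// Add one like for this name; returning players keep compounding their total.
function registerLike(name) {
  const total = (likes.get(name) || 0) + 1;
  likes.set(name, total);
  return total;
}

// Simulates handling the JSON body sent on each click of the like button.
function handleLikeRequest(jsonBody) {
  const { name } = JSON.parse(jsonBody);
  return JSON.stringify({ name: name, likes: registerLike(name) });
}

console.log(handleLikeRequest('{"name":"Sam"}'));
console.log(handleLikeRequest('{"name":"Sam"}'));
console.log(handleLikeRequest('{"name":"Alex"}'));
```

Because the store is keyed by name only, two different people who both type "Sam" share one total, which is the "super score" effect described above.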

In the past, when it has come to doing small code projects, I have looked up how to do something, found code that suited my need, copied it and then tweaked it to properly fit my programme. However, this doesn’t mean I necessarily understood it, which is something I wanted to do differently this time. As this was basically my main role in the team, I wanted to make sure I would be able to explain to other people how it worked rather than have a piece that worked but just because it did. Which I guess relates to this whole degree itself: nothing is really taught to us (especially in studio) and it’s all self-directed, so we need to learn the skills ourselves. In this day and age, when you don’t need a computer science degree to become a developer but simply need to be able to copy code from different places to suit your programme, I believe it is quite important to know what you are doing, because in the future it will make me far more efficient. And it’s the learning-by-doing thing that works really well for me and that I like. For example, when it came to having to deal with GET requests from both the server and user side, I had done something similar before with the Instagram location finder programme that I had had a play with earlier in the semester. Because I had used it before I sort of knew how it worked, but now I had the code itself to look through again and figure out why it worked and what parts I could omit for this different programme. I have to say, I think this semester I have learned more about programming from simply trial and error, Liam and online tutorials than I did in all of last year, where I did both programming papers. It was also great to learn this in a group setting where the other team members also are not super coding savvy, as trying to explain in non-technical terms what I was doing, or why I couldn’t do something that we wanted to do due to the nature of the system that I was writing, helped clarify what I was doing for myself.
I would certainly like to keep doing more programming projects in the future to further develop my skills and learn new things.

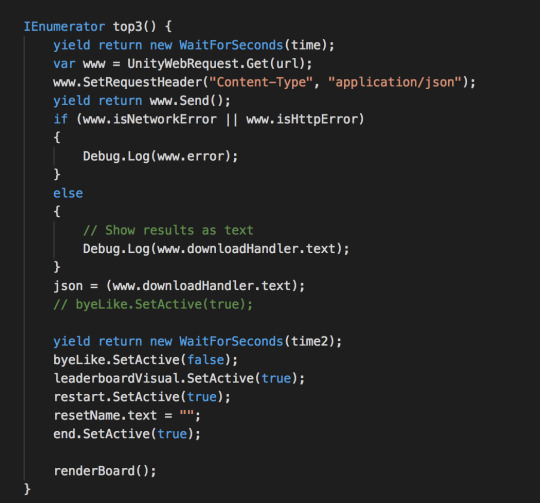

Transferring the user side to Unity was a whole different box of frogs though. First of all, Unity is all scripted in C#, which I have never used before (but luckily it is similar to Java, which I had dabbled in with Processing last year), and the whole mechanic of connecting to a server is made a lot more difficult as all requests need to be sent through a coroutine. Which, I’ll be honest, I had never heard of until I stumbled across a million Stack Overflow threads that explained that that is what you have to use. I think I have got my head around them mostly, but there are still some things about them that elude me. For example, everywhere (including the Unity manual) it says that they end when they hit a “return”, but I have coroutines that have functions or call new functions still enclosed in the coroutine but after the return, and it still calls them (see below).

They also supposedly run for their specified length (of time) regardless of where they are called, i.e. they will run for 6 seconds if told to after being called from the Update( ) function. However, I have found that in some of my code this works, in other parts it just sort of ignores that rule, and sometimes it waits for a wee while longer than the instructed time. So it’s not totally something that I fully understand, but I know it well enough to be able to use it in the right places, and the majority of the function of the Unity scene is run by coroutines, so I am clearly doing something right. I like to think of it as a poorly trained animal: I know the commands and the animal knows what they mean, but it sometimes chooses to do its own thing.
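One way to picture the "code after the return still runs" behaviour is with a JavaScript generator. This is only an analogy under stated assumptions, not Unity's C# API: in a Unity coroutine a `yield return` pauses the routine and hands control back, and the lines after it run when the routine is resumed, while `yield break` is what actually ends it. The function name `likeRoutine` and the log messages are hypothetical.

```javascript
// Analogy only: a generator pauses at `yield` and continues from there
// when resumed, much like a coroutine pausing at `yield return`.
function* likeRoutine(log) {
  log.push("request sent");
  yield "waiting";              // pause here and hand control back to the caller
  log.push("response handled"); // still runs once the routine is resumed
  return "done";                // a plain return is what really ends the routine
}

const log = [];
const routine = likeRoutine(log);
const firstStep = routine.next();  // runs up to the yield
const secondStep = routine.next(); // resumes after the yield and finishes
console.log(firstStep, secondStep, log);
```

Seen this way, code placed after the pause point is expected to run, just later than the code before it.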

Another major challenge I had was dealing with JSON. For starters, when sending the exact same data as I was from the webpage but through Unity, it was as if it was parsing the data into JSON and then parsing the JSON into more JSON, even though the commands were almost the same, so on the Python side I had to update it so that it decoded the incoming JSON properly and it was then actually processed logically. This brought in a whole world of problems in itself, as then the data that was being sent back to the user was all jumbled and messed up, which was less than ideal as it felt like I had actually gone backwards rather than made progress. But as I have learned from this project, every new error you get means that you are making progress, as you have made it past the previous error. After all of that though, I did get it working.
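The "JSON inside more JSON" symptom usually means the data was encoded twice. The sketch below shows the effect and one tolerant way to decode it; it is written in JavaScript as an illustration, not taken from the project's Python code, and the names `decodeLikePayload` and the `{ name: "Sam" }` payload are hypothetical.

```javascript
const payload = { name: "Sam" };
const encodedOnce = JSON.stringify(payload);      // a JSON object
const encodedTwice = JSON.stringify(encodedOnce); // a JSON string that contains JSON

// Hypothetical decoder that accepts both single and double encoded bodies.
function decodeLikePayload(body) {
  let value = JSON.parse(body);
  // If the first parse yields a string, the payload was encoded twice.
  if (typeof value === "string") {
    value = JSON.parse(value);
  }
  return value;
}

console.log(decodeLikePayload(encodedOnce).name);
console.log(decodeLikePayload(encodedTwice).name);
```

Fixing the sender so it encodes only once is usually cleaner, but a check like this makes the receiving side easier to debug.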

vimeo

Once I had done all of that, I realised that it was a perfectly functioning like system that recorded multiple users’ scores and could compound them, and it was perfect, but only once. There was no way to restart it without stopping the whole application and going again. So there was the next challenge, which proved a lot more difficult than initially suspected, mainly due to how different Game Objects are referenced from a script and, even harder, how the scripts of those Game Objects are referenced, which is what caused me many hours of strife. To make matters more difficult as well, Unity doesn’t appreciate all the things you could normally do in a C# script, so you have to find ways around its very stubborn walls, which in my case led to many, many scripts. That doesn’t sound like such a terrible thing, but to make sure each was working correctly I was logging things to the debug console from almost every script, so when I wanted to look for a specific message I was sending to try and get something working, it became a whole lot harder because there were 20+ scripts that were all printing to the console. But once again, I am a champion and figured it out.

vimeo

As I write this I realise that it doesn’t sound that difficult and didn’t take that long, but the code for the like system has been haunting me at night and has been an ongoing saga for weeks. I certainly have learned heaps and am happy with the process that I undertook. However, if I was to do it again knowing what I know now, I would skip the HTML part and just go straight for the C# scripts, because I think that transition was the hardest part of this whole process. In HTML (with JavaScript) it is easy to do everything all in one document, but with C# you can’t send data and also receive it in the same script; you need to separate them into multiple scripts, which is where confusion starts manifesting as you try and think across multiple scripts and have to remember what each one is referencing and how it will then affect the other one. The issue with back-end systems as well is that you don’t overtly see your mistake as you would with front end, because either something happens or it doesn’t, and if it doesn’t you then have to comb through your code to find the broken section and find out what is messing you up. Overall I think this is what I found the most frustrating, as I have only really done mainly front-end development rather than back end, but this project was certainly a great insight into it. Now as for this whole project, once we got on a roll I obviously stopped blogging as I got so engrossed in the work, which I do understand is not the best practice, but as we were pushed for time (having left it so late to start doing something) I focused solely on getting the work done. From an outsider’s perspective the final product doesn’t really show how much work went into the programming of it, so I have uploaded all my scripts to GitHub so you can have a look at them, and also see how things work if you are interested: https://github.com/JabronusMaximus/ARcde-Scripts


Quote

7 Steps to Score Perfect 100/100 in Google Page Speed Score

There’s nothing as hectic as trying to find an ideal balance between user experience and a fast Google PageSpeed score. Page speed is one of the key factors in SEO, and achieving a perfect score of 100/100 needs a well-arranged formula, even though each website follows a different approach when it comes to that formula. Various tests need to be done, and the best way to perform them is on a staging/development site before you transfer the changes to your live website. The tips below assume a site built with the latest version of WordPress and the Salient theme.

Here are the tips to score a perfect 100 in PageSpeed Insights, from the best web development agency in Mumbai.

1. CSS and JavaScript Render Blocking

This involves two steps. First, identify the parts of your CSS and JS files that are vital for rendering, then inline them in the <head> element of the HTML within <style> and <script> tags.

Second, defer or asynchronously load the non-critical elements. A good example is a popup, which tends to appear some time after a user lands on your website: you can separate its CSS and JS into different files and load them later. Any non-critical CSS, as well as Google Fonts, can be defer-loaded using a code snippet.
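A deferred stylesheet load of the kind described above might look something like the following. It is a sketch of one common pattern (loading with a non-matching media type, then switching it on once loaded), not the specific snippet the post has in mind; the function name `loadStylesheetDeferred` and the path `/css/popup.css` are hypothetical.

```javascript
// Sketch: load a non-critical stylesheet without blocking the first render.
// `doc` is the page's document object; `href` is the stylesheet URL.
function loadStylesheetDeferred(doc, href) {
  const link = doc.createElement("link");
  link.rel = "stylesheet";
  link.href = href;
  // A media type that does not match the screen keeps the file from blocking rendering.
  link.media = "print";
  link.onload = function () {
    link.onload = null;
    link.media = "all"; // apply the styles once the file has arrived
  };
  doc.head.appendChild(link);
  return link;
}

// In a browser you would call, for example:
// loadStylesheetDeferred(document, "/css/popup.css");
```

Critical styles should still be inlined in the <head> as described in the first step, since anything loaded this way may appear slightly late.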

2. Minify Your HTML

This is another excellent way of improving your Google PageSpeed score. There are a lot of plugins that handle the minification of CSS and JS files; the ones most commonly seen are Autoptimize and Better WordPress Minify. HTML markup takes up a lot of space, and heavy, bloated files end up slowing the rate at which a page loads.

It is important to delete any unnecessary and duplicated data to increase page speed. Several plugins, such as HTML Minify and Better WordPress Minify, can be of great help with minification and fix the problem without affecting how the page behaves in the browser.

3. DNS

If you are using a shared hosting environment, Cloudflare will do you good, since shared hosting tends to be a bit slower than a dedicated server, which works best when every unneeded service is switched off. As an alternative, you can consider managed hosting providers such as WP Engine, Presslabs and Pantheon, which come with built-in server and CDN configuration. This is an option if you lack the skills or the time to set up Cloudflare yourself.

4. Avoid Too Many Plugins

The more plugins, the slower the site. If you use a CMS such as Joomla or WordPress, you should avoid using many plugins, and always consider disabling and deleting those that are unnecessary. You can often achieve the same result with small custom code tweaks, copied and pasted into your site, rather than installing monster plugins that each load 2-3 JS and CSS files.

5. Optimize Images

There are several ways to fix image optimization issues, which affect almost all websites. They include:

a) Installing a server add-in, as recommended by Google.

b) Using a third-party API such as TinyPNG, ShortPixel or Kraken.

c) Using the ImageMagick library from PHP.

6. Enable Compression

One of the key hindrances to a fast page speed is images. That’s why, for a better mobile experience, it’s always advised that you delete or hide any image that adds no value on a phone. For compression itself you don’t need another plugin; you can use the one you already have, and you will notice that a lot of elements will be served compressed with GZIP.

7. Passing the Server Response Time

The server response time recommended by Google is 0.2 seconds or less. If you are running a website on a high-spec dedicated server that hosts a number of accounts, most of what you read online will suggest switching to a faster WordPress host, which is the simpler option. If you intend to stay on your own environment, you can switch off any services running on the server that are not useful in order to speed it up, even though this does not make a significant difference.

The biggest reduction in server response time comes from switching to PHP 7, although not all hosts support it.

Statistics have shown a 20 to 50 percent speed increase from switching to PHP 7. Many people don’t know which version of PHP they run. You can download a PHP compatibility checker plugin for WordPress, which scans through your code and identifies any obstacle to switching to PHP 7, so you can see whether your site is compatible. The good thing about this plugin is that once you install it, you will see the results clearly in your admin dashboard, which leaves little room for mistakes.

If you are using WHM, you can switch with the “MultiPHP Manager”: all you need to do is select the site whose PHP version you want to change. If you do not have access to WHM, you can ask your server admins to make the change, but make sure it is done in a test/development environment first.

Conclusion

Achieving a high Google speed score is key to SEO in today’s world, owing to the tremendous increase in the use of mobile devices, yet most people overlook it and consider it a minor technical aspect.

You should not shy away from making changes to your environment. On the contrary, set out to test several variants of code on your website and aim for a full 100/100 page speed score.


Text

5 Reasons Why You Cannot Learn Web Design Well.

A Beginner's Guide To CSS

CSS is vital if you're interested in the World Wide Web. Alongside HTML and JavaScript, it is among the most essential technologies of the World Wide Web. CSS is a style sheet language that determines the way HTML documents should appear. Many different styles can be applied to an HTML document, each giving it its own distinctive look and feel. This article gives you an overview of how CSS works and how you can use it to improve your websites.

Håkon Wium Lie, a Norwegian programmer who worked with Sir Tim Berners-Lee, the inventor of HTML and the World Wide Web, first proposed the idea of cascading style sheets in 1994. Other style sheet languages were also proposed at that time, but CSS was the one adopted by the W3C, which released the first version of CSS in 1996. The W3C took an interest in the development of CSS and added work on it to the deliverables of its HTML editorial review board. In 1996, Netscape presented JavaScript Style Sheets as an alternative. CSS went on to be widely used and became a standard for web design.

CSS provides you with more control over presentation. You can control the spacing between paragraphs as well as background images. These features make it simpler to create visually appealing web pages. CSS is also simple to pick up, so even if it's your first time using it, it is worth taking the time to learn the basics. CSS needs no download or trial version: a text editor and a browser are enough to get started. Once you've used it, you will be able to modify the appearance of your website. It's also an increasingly popular option for adding new features to websites, and it is possible to create more complex web pages simply by using the latest version of CSS. If you want to learn more about CSS, take a look at the free guide.

The CSS specification defines how styles are applied. Selectors determine which elements a rule applies to. A pseudo-class such as :hover matches an element only while the user points at it, so the element's appearance changes as the user interacts with it. A pseudo-element such as ::first-line, on the other hand, styles part of an element rather than the whole element.

CSS is another important standard for the web. It lets web designers create pages that have a consistent style, and a whole page can be styled with a single style sheet. Because of its popularity, many developers use it to build mobile-friendly websites, and it has become a necessity for web designers.

Inheritance is one of the most important features of CSS. Some properties of an ancestor element, such as color and font, are passed on to its descendants. Font size, for example, is inherited by the descendants of an element. Inheritance lets a descendant element take on its parent's property values, which can be extremely beneficial in web design: if you add a new kind of text element to your site, it can take on the look of the surrounding text.

Another benefit of CSS is that it can save time. A single CSS rule can apply a style to every matching HTML element, which HTML alone cannot do, so changing that one rule changes all of those elements. Because the same style sheet is shared between pages, your website can load faster. With CSS, you can make global modifications to your web pages in a snap and use the same style across different pages. This is extremely useful for websites with many pages.

CSS has several kinds of selectors, including class and id selectors. An id identifies a single element and can also serve as a link target. Some property values are integers; each channel of an rgb() color, for example, should be between 0 and 255. Property names and keyword values do not need to be capitalized, since CSS treats them case-insensitively. Inline styles don't affect the browser's own appearance, but they do affect how the elements that carry them are displayed on a webpage.


Text

Five Pieces of Advice That You Must Listen To Before Learning Web Design.

The CSS Beginner's Guide CSS is essential if you are interested in the World Wide Web. It is a vital technology for JavaScript web and the World Wide Web. CSS is a stylesheet that defines how HTML documents should look. There are many styles that can be applied HTML documents. Each style has its own unique look and feel. You can find more information on CSS here. This article will provide an overview of CSS and show you how to use it to increase the performance of your website. Hakon Wium Lie, a Norwegian programmer, was a close friend of Sir Tim Berners Lee who invented HTML and World Wide Web. He established CSS in 1996. In 1994, Hakon Wium-Lie proposed the idea of cascading stylesheets. While other style sheets languages were also suggested at the time, CSS was adopted by W3C in 1996. It became a common style sheet language and a standard in web design. CSS gives you more control over your presentation. You can adjust the spacing between paragraphs and background images. These features make it easier to create visually appealing web pages. CSS is easy to use, so it's worth spending the time to get to know the basics. Start your CSS journey by downloading the free trial version. After you have used it, you can modify the appearance and functionality on your website. The W3C published the first CSS version in 1996. In 1996, the World Wide Web Consortium released the first version of CSS. The World Wide Web Consortium also added CSS to its editorial board. Netscape introduced JavaScript Style Sheets in 1995. The W3C was very interested in CSS development. It is also a popular way to add new features to websites. You can create complex web pages with the latest CSS version. The free CSS guide will help you learn more. The CSS specification describes how styles can be applied. Once a user selects an element, the CSS specification changes its appearance. The element can only be selected by a user agent if the name matches the attribute. 
A pseudo-element lets you style a specific part of an element, such as its first line or first letter, rather than the whole element. A pseudo-class, by contrast, matches an element in a particular state, such as a link the user is hovering over.

CSS is also an important web standard. It lets web designers keep pages consistent, because a single style sheet can style a whole page or a whole site. It is central to mobile-friendly design too, since the same page can be given different layouts for different screen sizes. For these reasons CSS has become a necessity in web design.

One of CSS's most important features is inheritance. Certain properties of an ancestor, such as color and font, are passed on to its descendants. Font size is inherited in the same way. A descendant element takes its parent's value for an inherited property unless a rule gives it a value of its own. This is a great benefit for web design: if you choose a new typeface for your website, you can set it once on a top-level element and the text inside it follows.

Another advantage of CSS is that it saves time. One rule can apply the same style to every matching HTML element, which HTML alone cannot do, and a rule can just as easily target a single element. The same style sheet can be shared across multiple pages, so one change updates all of them. This is especially useful for sites with many pages. Because the browser can cache a shared style sheet, pages may also load faster.

CSS offers several kinds of selectors, including type, class, and id selectors. An id selector matches the element with that id, and the same id can serve as the target of a link. Property values take different forms. In an rgb() color, for example, each channel is an integer between 0 and 255. Keywords are case-insensitive, and the usual convention is to write them in lowercase. Inline styles, written in an element's style attribute, affect only that element and normally override rules from style sheets.
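Inheritance, class and id selectors, and 0 to 255 color values can be seen together in a short sketch like the following. The class name `note` and the id `summary` are hypothetical names chosen for the example, not anything the post defines.

```css
/* color and font-family are inherited properties, so every
   descendant of <body> uses these values unless another
   rule sets them on that descendant. */
body {
  color: rgb(34, 34, 34); /* each channel is an integer from 0 to 255 */
  font-family: Georgia, serif;
}

/* Class selector: any element with class="note". */
.note {
  background-color: rgb(255, 250, 205);
}

/* ID selector: the one element with id="summary".
   A link such as href="#summary" jumps to the same element. */
#summary {
  border: 1px solid rgb(0, 0, 0); /* border is not inherited */
}
```

An inline style such as `style="color: rgb(200, 0, 0)"` on a single element would take precedence over the `body` color for that element only.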

0 notes

Text

15 Web Design Tips From Web Design Experts.

A Beginner’s Guide To CSS

CSS is essential for anyone who is interested in the World Wide Web. Together with HTML and JavaScript, it is one of the core technologies of the web. CSS is a style sheet language that determines the appearance of HTML documents. Many different styles can be applied to the same HTML document, and each one has its own look and feel. This article gives a brief overview of CSS and explains how it can be used to improve websites.

Håkon Wium Lie is a Norwegian programmer who worked at CERN alongside Sir Tim Berners-Lee, the inventor of HTML and the World Wide Web. Lie first proposed cascading style sheets in 1994. Other style sheet languages were suggested at that time too, and Netscape proposed JavaScript Style Sheets in 1996, but CSS was the one the W3C adopted. The W3C handed CSS work to its HTML editorial review board and released the first version, CSS1, in December 1996. CSS was then widely adopted and became a standard for web design.

CSS offers more control over presentation. You can set the spacing between paragraphs, as well as background images. These features make it easy to create visually appealing websites. CSS is also easy to learn, and it needs no installation: browsers support it out of the box, so you can start customizing the look of your site with nothing more than a text editor. Newer versions of CSS let you build more complex web pages.

The CSS specification describes the way styles are applied. Each rule consists of a selector and a set of declarations. When an element matches the selector, for instance by its name, class, or an attribute, the user agent (normally the browser) applies the declarations to it. Elements that do not match are left unchanged by that rule.
A pseudo-element, however, does not select a whole element. It selects one part of an element, such as the first line or the first letter, so that part can be styled on its own. A related feature, the pseudo-class, selects an element only while it is in a certain state, for example while the user hovers over it.

CSS is another important web standard. It allows web designers to make pages with a consistent style, because one style sheet can be enough for a whole page. It also underpins mobile-friendly sites, where the same content is laid out differently on small screens. For web developers it is a necessity.

One of the most important features of CSS is inheritance. Descendants can inherit characteristics of an ancestor, such as color or font. An element's font size is passed on in the same way. Through inheritance, a descendant takes the parent's value for a property unless it is given its own. This is an important feature in web design: setting a typeface once on a parent element keeps the text inside it consistent.

Another benefit of CSS is that it can help you save time. Unlike HTML, CSS can apply one style to every matching element, and it can also style just one element. It is a quick way to make global changes across your web pages, because the same style sheet can be used on different pages. This is extremely helpful for websites with many pages. A shared style sheet can also be cached by the browser, which may make pages load faster.

CSS provides several kinds of selectors, including type, class, and id selectors. An id identifies a single element, and a link can point to that element by its id. Some property values are integers. Each channel of an rgb() color, for example, should be between 0 and 255. Property names and keywords are case-insensitive and are conventionally written in lowercase. Inline styles apply only to the element that carries them, and they normally take precedence over style sheet rules for that element.
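The difference between a pseudo-element and a pseudo-class is easier to see in code. The rules below are a minimal sketch using standard CSS selectors, with property values picked only for illustration.

```css
/* Pseudo-element: styles only the first rendered line
   of each paragraph, not the whole paragraph. */
p::first-line {
  font-variant: small-caps;
}

/* Pseudo-element: styles only the first letter. */
p::first-letter {
  font-size: 2em;
}

/* Pseudo-class: applies to a link only while the
   pointer is over it. */
a:hover {
  text-decoration: underline;
}
```

Pseudo-elements are written with two colons and pseudo-classes with one, which helps tell them apart when reading a style sheet.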

0 notes

Text

7 Web Design Ideas That Can Impress Your Friends.


0 notes

Text

How To Own Web Design For Free.